Retinal Thickness May Be a Cognitive Biomarker

Abstract

Two longitudinal studies have provided some of the first evidence that people with a thinner retinal nerve fiber layer are at higher risk of future cognitive problems and dementia.

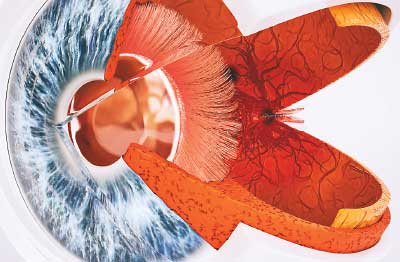

An old proverb holds that the eyes are windows to the soul, but more precisely, they are windows to the brain. The retina, the light-sensitive tissue lining the back of the eye, connects directly to the brain through the optic nerve, and both are part of the central nervous system.

The thickness of the retina’s layer of nerve fibers might indicate a person’s future risk of cognitive problems. Because this layer can be imaged easily with existing tools, it could serve as a biomarker for the early detection of Alzheimer’s disease and other dementias.

Numerous studies over the past decades have suggested that declines in vision and memory, both common with age, may be linked. Most recently, a study published in June in JAMA Ophthalmology concluded that vision and cognitive function appear to decline together over time. Vision problems, however, had a stronger influence on future memory problems than the reverse, suggesting that vision loss might predict future cognitive loss. Could information from the eyes help researchers and clinicians identify the people most likely to experience cognitive decline?

Two studies published in June in JAMA Neurology suggest the answer to this question may be yes. Both studies pointed to retinal thickness as a potential biomarker for future cognitive decline, including Alzheimer’s disease.

Both studies used optical coherence tomography (OCT), an imaging technique that clinicians routinely use to diagnose eye disorders such as glaucoma and macular degeneration. OCT measures reflected light to build detailed, three-dimensional images of the inside of the eye, much as ultrasound builds images from reflected sound waves.

In one of the studies, researchers at Erasmus University in the Netherlands looked directly at retinal changes and dementia. They analyzed data from the Rotterdam Study, a large population-based study of aging that has followed older adults in the Rotterdam suburb of Ommoord since 1990. Participants received periodic eye exams and cognitive exams, which allowed a prospective analysis.

The investigators identified about 3,300 adults who had undergone OCT imaging and were free of eye disease at baseline. Over about 4.5 years of follow-up, 86 of these adults developed clinical dementia, including 68 who developed Alzheimer’s disease. Adults with a thinner retinal nerve fiber layer at baseline, specifically in the region where the retina and optic nerve meet, were more likely to develop dementia later. For example, when participants were divided into four groups (quartiles) by the thickness of this layer, those in the lowest quartile were more than two and a half times as likely to develop dementia as those in the highest quartile.

For the other study, researchers at University College London analyzed retinal nerve fiber layer thickness and changes in cognitive performance in adults aged 40 to 69, regardless of whether they had any cognitive disease. Like the Dutch group, the investigators drew on data from a large cohort, in this case the UK Biobank. They examined clinical data from more than 30,000 individuals who had received an OCT scan and who completed cognitive tests of memory, reaction time, and reasoning every three years.

The U.K. investigators stratified the participants into five groups (quintiles) based on retinal nerve fiber layer thickness. Compared with participants in the highest (thickest) quintile at baseline, participants in the second and third quintiles were about 1.5 times as likely to perform worse on at least one cognitive test after three years. Participants in the lowest two quintiles were about twice as likely to perform worse on follow-up cognitive tests.

“Our study strengthens the argument of an association between neurodegenerative processes that affect the brain and the eye and indicates that OCT measurement of the [retinal nerve fiber layer] is a potential noninvasive, relatively low-cost, and time-efficient screening test for early cognitive changes,” wrote lead author Paul Foster, Ph.D., and colleagues.

“However, one must be careful in [OCT] interpretation so as to avoid an unnecessary psychological burden for people who may not ultimately experience cognitive decline,” the authors continued. “Further, attempting to risk-stratify people would be most appropriate if there is a viable treatment or preventative measure available. Additional research is required to define a possible role for these observations in health policies and to determine the relevance at an individual level.” ■

The studies discussed are “Association of Retinal Nerve Fiber Layer Thinning With Current and Future Cognitive Decline” and “Association of Retinal Neurodegeneration on Optical Coherence Tomography With Dementia,” both published in JAMA Neurology, and “Longitudinal Associations Between Visual Impairment and Cognitive Functioning: The Salisbury Eye Evaluation Study,” published in JAMA Ophthalmology.